The reason you see that kind of

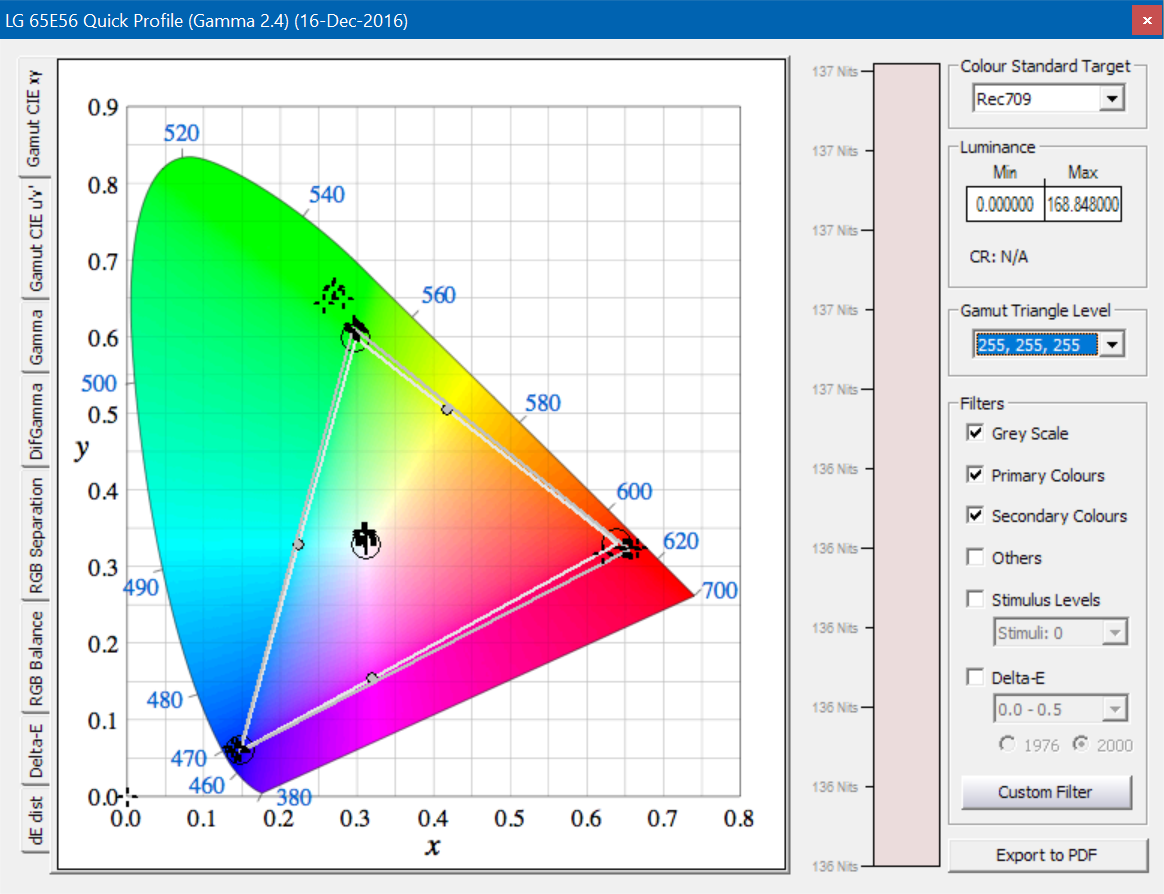

problems using Normal Gamut @ SDR is because LG has an issue in it's Color Gamut mapping, since it's Normal Gamut it's providing a closer gamut coverage to REC.709 (a bit oversaturated)...and the users which calibrates the LG's using that option when they use only the LG's internal calibration controls, the issue is that the tracking of low end colors is expanded to larger coverage, this is something I haven't seen before, seems that the LG engineers haven't programmed well that mapping, see what is happening when you measure the lower luminance levels...this picture shows a 20-Point Luminance Sweep @ 100% Saturation, starting from 100% until 5%. From 25% Luminance and below the colorspace coverage is larger, this will provide non-linear gradients or other problems with strange shades to real picture, like the ones you posted earler

here. Usually that kind of measurements are ignored from the users which measure only a few color points to validate the post calibration results.
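To check for this kind of low-level gamut expansion yourself, the sketch below generates the 20 sweep levels described above (100% down to 5%) and flags measured xy chromaticities that fall outside the Rec.709 triangle. It is a minimal sketch under stated assumptions: the helper names are hypothetical, "luminance %" is treated as the stimulus level in full-range 8-bit code values (0-255), and the Rec.709 primaries are the standard ITU-R BT.709 values. It only catches points pushed *outside* Rec.709; drift that stays inside the triangle needs a dE check instead.

```python
# Hypothetical helpers for inspecting a 100%-saturation luminance sweep.
# Assumption: "level %" = percent of full RGB drive, full-range 8-bit (0-255).

# Standard ITU-R BT.709 primaries in CIE 1931 xy.
REC709_PRIMARIES = {"R": (0.64, 0.33), "G": (0.30, 0.60), "B": (0.15, 0.06)}


def sweep_levels(count=20, start=100, stop=5):
    """Equally spaced stimulus levels from start down to stop, inclusive."""
    step = (start - stop) / (count - 1)
    return [round(start - i * step, 4) for i in range(count)]


def patch_rgb(channels, level, max_code=255):
    """8-bit code values for a 100%-saturated color at `level` percent.
    channels: (1, 0, 0) for red, (0, 1, 1) for cyan, etc."""
    v = round(max_code * level / 100)
    return tuple(v * c for c in channels)


def _cross(o, a, b):
    """z-component of (a - o) x (b - o); its sign tells which side of o->a b is on."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def inside_rec709(xy):
    """True if the xy point lies inside (or on the edge of) the Rec.709 triangle."""
    r, g, b = REC709_PRIMARIES["R"], REC709_PRIMARIES["G"], REC709_PRIMARIES["B"]
    d1, d2, d3 = _cross(r, g, xy), _cross(g, b, xy), _cross(b, r, xy)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_neg and has_pos)


def levels_outside_rec709(measurements):
    """measurements: {level_percent: (x, y)} -> sorted levels whose xy is outside Rec.709."""
    return sorted(level for level, xy in measurements.items() if not inside_rec709(xy))
```

Usage, with made-up placeholder readings for a red sweep: `levels_outside_rec709({100: (0.64, 0.33), 25: (0.70, 0.29)})` flags the 25% level, and `patch_rgb((1, 0, 0), 25)` gives the red patch to send for that level.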

This version is an animated GIF (AGIF), which should play back in all browsers.

This version is an animated PNG (APNG), which animates only in browsers that support APNG playback.

So if you want the best possible picture performance, you have to use a 3D LUT box (like the eeColor 3D LUT box, which has the largest 3D LUT memory on the market while also being the lowest priced). Pre-calibrate only the 100% white of the LG using its internal controls, then let calibration software (LightSpace/CalMAN/DisplayCAL/ArgyllCMS) measure thousands of color points automatically. From those measurements, the 3D LUT correction is generated for a 65-point cube (274,625 color points).
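For readers wondering how a 65-point cube corrects colors that fall between its nodes, here is a minimal sketch of applying a 3D LUT with trilinear interpolation. Assumptions: the LUT is stored as a nested list `lut[r][g][b] -> (R, G, B)` normalized to 0..1, and the function names are hypothetical; this is not the eeColor box's internal implementation, and real LUT processors often use tetrahedral interpolation instead.

```python
# Minimal sketch: apply an N-point 3D LUT to one RGB triple (values 0..1)
# using trilinear interpolation. Hypothetical layout: lut[r][g][b] -> (R, G, B).


def identity_lut(n):
    """An N-point LUT that maps every node to itself (no correction)."""
    s = n - 1
    return [[[(r / s, g / s, b / s) for b in range(n)] for g in range(n)] for r in range(n)]


def apply_lut(lut, rgb):
    """Blend the 8 surrounding nodes, weighted by distance inside the cell."""
    s = len(lut) - 1
    idx, frac = [], []
    for v in rgb:
        p = min(max(v, 0.0), 1.0) * s
        i = min(int(p), s - 1)  # keep i + 1 inside the grid when v == 1.0
        idx.append(i)
        frac.append(p - i)
    (ri, gi, bi), (fr, fg, fb) = idx, frac
    out = [0.0, 0.0, 0.0]
    for dr in (0, 1):
        wr = fr if dr else 1 - fr
        for dg in (0, 1):
            wg = fg if dg else 1 - fg
            for db in (0, 1):
                wb = fb if db else 1 - fb
                node = lut[ri + dr][gi + dg][bi + db]
                w = wr * wg * wb
                for c in range(3):
                    out[c] += w * node[c]
    return tuple(out)
```

With an identity LUT the output equals the input, which is a quick sanity check; a calibration LUT would instead store the measured corrections at each node.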

Here you can see a comparison of the number of color points a display's internal controls can adjust versus the cube sizes of various 3D LUT devices.
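The cube sizes mentioned in this post follow directly from nodes per axis cubed. A tiny sketch (the function name is hypothetical) to reproduce those counts:

```python
# Number of color points in an N-point 3D LUT cube: N nodes on each of R, G, B.


def cube_points(nodes_per_axis):
    return nodes_per_axis ** 3


for n in (17, 21, 65):
    print(f"{n}-point cube: {cube_points(n):,} color points")
```

This gives 4,913, 9,261 and 274,625 points for the 17-, 21- and 65-point cubes.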

With a 3D LUT you calibrate many different levels of saturation, hue and luminance, so performance is reference-grade at any color. In 8-bit systems, 17 nodes per component (a 17-point cube) has proven to be the best trade-off between display/meter/processor hardware, measuring time, display stability and overall quality, which is why that size is commonly used in the pro industry. Now, with ultra-fast meters like the Klein K-10A, a 21-point cube takes less time than before (about 2 h 30 min in total, with a 0.5 s delay before each patch read), and it has become the standard size used in post-production.
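The 2 h 30 min figure can be sanity-checked with simple arithmetic: a 21-point cube is 9,261 patches, so 2.5 hours works out to just under 1 second per patch, leaving roughly 0.47 s for the meter read and software overhead after the 0.5 s delay. The sketch below (hypothetical helper names) derives that implied per-patch time from the numbers in this post and uses it to estimate other cube sizes; it assumes every patch takes the same time, which real sessions (dark patches, longer integration) may not.

```python
# Back-of-the-envelope profiling time, assuming a constant time per patch.


def seconds_per_patch(total_hours, patches):
    """Implied time per patch from a known total session length."""
    return total_hours * 3600 / patches


def estimate_hours(patches, delay_s, read_s):
    """Total session length for `patches` with a fixed delay and read time each."""
    return patches * (delay_s + read_s) / 3600


per_patch = seconds_per_patch(2.5, 21 ** 3)  # from the post: 21-point cube, ~2.5 h
read_and_overhead = per_patch - 0.5          # subtract the stated 0.5 s delay
print(f"implied time per patch: {per_patch:.3f} s")
print(f"implied read + overhead: {read_and_overhead:.3f} s")
print(f"17-point cube at the same pace: {estimate_hours(17 ** 3, 0.5, read_and_overhead):.2f} h")
```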

What matters most for the best final result is total volumetric accuracy. Consumer displays do not track linearly across their whole color volume, so a large cube profiled with a grid of equally spaced RGB values (a 17- or 21-point cube, i.e. 4,913 or 9,261 color points) covers all potential colors equally and gives the most accurate correction.
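An equally spaced RGB grid like the one described above is easy to generate. This sketch (hypothetical helper names) builds the patch list for an N-point cube in full-range 8-bit code values; it assumes 0-255 levels and Python's `round()` (half-to-even), whereas calibration software may use video range (16-235), other rounding, or a different patch order.

```python
from itertools import product

# Equally spaced RGB grid for an N-point cube (full-range 8-bit assumed).


def grid_levels(nodes, max_code=255):
    """N code values evenly spread from 0 to max_code."""
    return [round(i * max_code / (nodes - 1)) for i in range(nodes)]


def grid_patches(nodes, max_code=255):
    """Every (R, G, B) combination of the grid levels: nodes**3 patches."""
    levels = grid_levels(nodes, max_code)
    return list(product(levels, repeat=3))


patches = grid_patches(17)
print(len(patches), patches[0], patches[-1])
```

Because every axis uses the same spacing, no region of the color volume gets more or fewer patches than another.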

This info is for all users of these beautiful TVs who want the best possible picture for Blu-ray SDR playback.